Recent signals suggest rising attempts to centralize and align cultural memory:

- A White House directive seeks to reshape museum narratives under a policy to “restore” American history (White House, March 2025).

- Reports of a “comprehensive internal review” across Smithsonian museums point to political content realignment (NPR, Aug 12, 2025).

- Prominent AI leaders talk openly about “rewriting the entire corpus of human knowledge” before training models on it (Business Insider, June 21, 2025).

We’re not here to rant. We’re here to help you mirror open, upstream sources—verifiably, with provenance—so what’s public today can’t be silently changed tomorrow.

Decentralizing access and preserving provenance supports research reproducibility, education, and resilience—regardless of politics.

This post introduces a practical set of scripts (Knowledge ARK) and shows how to run them fast. The code and brief docs live here: Knowledge ARK tutorials.

What the tools do

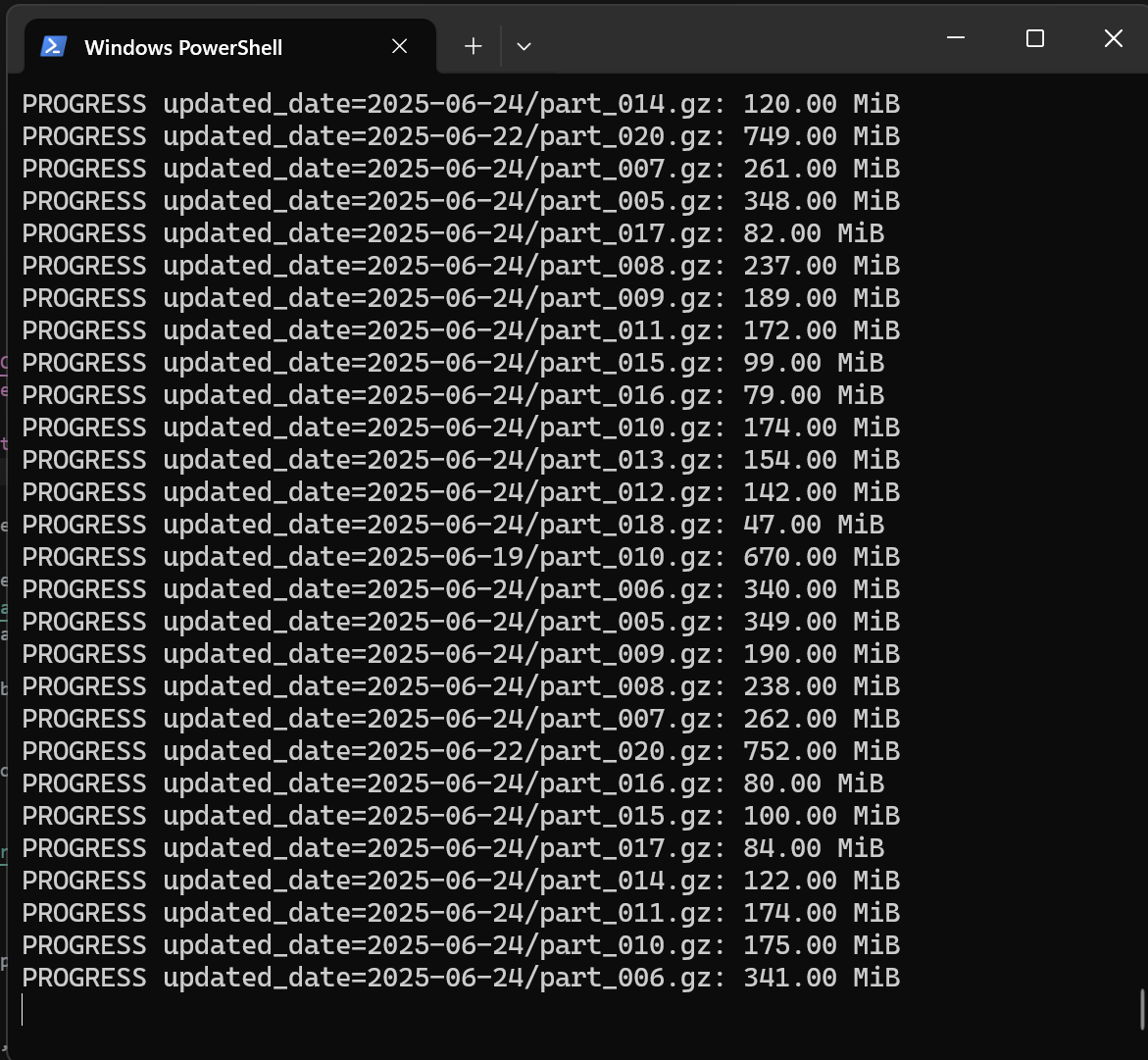

These scripts safeguard high‑value, open datasets. Each harvester pulls directly from upstream providers, writes immutable, date‑stamped snapshots, and emits a manifest you can verify.

- OpenAlex Works — about 200 million scholarly works across disciplines (scholarly metadata graph; resumable within a storage budget).

- arXiv PDFs — core open preprint corpus in science and CS (downloaded from Amazon S3 requester‑pays buckets — you cover bandwidth costs; date/prefix filters; budget guardrails).

- Wikipedia dumps — the canonical open encyclopedia (dumpstatus‑driven selection: pages‑articles‑multistream, indexes, abstracts, titles).

- Project Gutenberg — over 70,000 books (RDF‑driven selection of public‑domain EPUB/UTF‑8 text; configurable).

- Smithsonian Open Access — millions of openly licensed images and records (API‑based media + JSON metadata; free API key).

- US Data.gov — hundreds of thousands of federal datasets (CKAN search by query/org; resumable downloads).

All scripts write to an archive layout like:

<archive_root>/<source>/<YYYYMMDD>/raw/...

<archive_root>/<source>/<YYYYMMDD>/manifests/*.json
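
The layout above can be sketched in a few lines of Python. This is a minimal illustration, not code from the Knowledge ARK scripts: the helper name `snapshot_dirs` and its parameters are assumptions made for this example.

```python
from datetime import date
from pathlib import Path


def snapshot_dirs(archive_root, source, day=None):
    """Return (raw_dir, manifests_dir) for one dated snapshot, creating both.

    Hypothetical helper: mirrors <archive_root>/<source>/<YYYYMMDD>/{raw,manifests}.
    """
    day = day or date.today()
    snapshot = Path(archive_root) / source / day.strftime("%Y%m%d")
    raw_dir = snapshot / "raw"
    manifests_dir = snapshot / "manifests"
    # exist_ok=True lets a resumed run on the same day reuse its folder.
    raw_dir.mkdir(parents=True, exist_ok=True)
    manifests_dir.mkdir(parents=True, exist_ok=True)
    return raw_dir, manifests_dir
```

Calling `snapshot_dirs("archive", "wikipedia")` on a later day yields a new dated folder rather than touching an earlier one, which is the property the date-stamped layout is meant to provide.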

Verification workflow (at a glance)

Source → Snapshot (YYYYMMDD) → Manifest (hashes, counts) → Backup (S3/NAS/offsite) → Verify (checksums)

- Each run writes a manifest (.../manifests/*.json) with file counts and byte totals.

- Prefer immutable, date-stamped snapshots: don’t overwrite, add a new day’s folder instead.

- Keep a small README.txt in each <source>/<YYYYMMDD>/ noting the script version, exact upstream URL(s), and run parameters.

- Store upstream license/provenance files alongside the data where applicable.

- For long-term copies, consider parity sets (PAR2) and multi-location backups.
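
To make the Manifest and Verify steps concrete, here is a minimal sketch using only the Python standard library. It is an assumption-laden illustration: the function names and the manifest fields (`file_count`, `total_bytes`, `files`, `sha256`) are invented for this example and are not necessarily the format the Knowledge ARK scripts emit.

```python
import hashlib
import json
from pathlib import Path


def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large dumps never load fully into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(raw_dir):
    """Describe every file under raw_dir (field names are hypothetical)."""
    raw_dir = Path(raw_dir)
    files = []
    for path in sorted(p for p in raw_dir.rglob("*") if p.is_file()):
        files.append({
            "path": path.relative_to(raw_dir).as_posix(),
            "bytes": path.stat().st_size,
            "sha256": sha256_file(path),
        })
    return {
        "file_count": len(files),
        "total_bytes": sum(entry["bytes"] for entry in files),
        "files": files,
    }


def write_manifest(manifest, path):
    """Save the manifest as pretty-printed JSON."""
    Path(path).write_text(json.dumps(manifest, indent=2), encoding="utf-8")


def verify_manifest(raw_dir, manifest):
    """Return relative paths that are missing or whose hash no longer matches."""
    raw_dir = Path(raw_dir)
    problems = []
    for entry in manifest["files"]:
        path = raw_dir / entry["path"]
        if not path.is_file() or sha256_file(path) != entry["sha256"]:
            problems.append(entry["path"])
    return problems
```

Running `verify_manifest` against each backup copy (S3, NAS, offsite) and expecting an empty list is one simple way to confirm that a copy still matches the snapshot it was made from.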

Quick start

All data is collected from openly licensed or public domain sources, subject to each provider’s terms.

Prereqs (Windows, macOS, Linux):

python -m pip install --upgrade requests

Clone or download the scripts from: Knowledge ARK tutorials

Then run what you need, adjusting budgets and concurrency to your disk and network.

OpenAlex (works)

python get_openalex_works.py --max-gib 480 --concurrency 12

# If CDN DNS is flaky on your network, prefer S3 fallback:

python get_openalex_works.py --max-gib 480 --concurrency 12 --prefer-s3

arXiv (PDFs)

# Downloaded from Amazon S3 requester‑pays buckets — you cover bandwidth costs. Start recent, then widen.

python get_arxiv_pdfs.py --start-date 2025-01-01 --max-gib 300 --concurrency 12

Wikipedia (enwiki latest)

python get_wikipedia_dump.py --project enwiki --date latest --max-gib 300 --concurrency 12

Project Gutenberg

python get_gutenberg.py --max-gib 200 --concurrency 8 --languages en --media-types application/epub+zip "text/plain; charset=utf-8"

Smithsonian Open Access

export SMITHSONIAN_API_KEY="YOUR_KEY" # macOS/Linux; or set in your shell profile

# Windows PowerShell equivalent: $env:SMITHSONIAN_API_KEY = "YOUR_KEY"

python get_smithsonian_openaccess.py --max-gib 200 --concurrency 8

US Government Open Data (data.gov)

python get_usgov_datagov.py --query climate --max-gib 200 --concurrency 8

# or focus an agency

python get_usgov_datagov.py --org usgs-gov --max-gib 200 --concurrency 8

Why do this at home (or your lab)?

Centralized platforms can change their content, revise history, or move to pay-to-play access. Your own mirror:

- Preserves upstream state at a point in time, with provenance.

- Keeps a copy of the record beyond the reach of government overreach, so knowledge is harder to lose or manipulate.

- Is resilient to policy shifts and outages.

- Lets you audit, research, and teach without permission gates.

- Ensures continued access even if upstream content is censored, paywalled, or removed.

Our goal with GnosisGPT is preservation, not revision. Start backing up the open corpus now, so no one can “align” it away later.

Resources cited: White House policy, NPR report, Business Insider.

Next steps

- After downloading, consider local indexing for exploration.

- Convert large JSONL to SQLite/Parquet (e.g., DuckDB or sqlite-utils) for fast queries.

- Stand up lightweight search (Elastic/OpenSearch/Meilisearch) on selected fields.

- Keep indexes versioned alongside each snapshot for reproducibility.
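
As one way to approach the JSONL-to-SQLite step above, the sketch below uses only Python's built-in `sqlite3` module. It rests on stated assumptions: each line is a JSON object, and the `id` and `title` fields are illustrative (adjust them to whatever your snapshot actually contains). The function and table names are hypothetical.

```python
import json
import sqlite3


def jsonl_to_sqlite(jsonl_path, db_path, batch_size=1000):
    """Load one JSON object per line into a `records` table; return rows inserted.

    Assumes each object may carry scalar "id" and "title" fields (hypothetical);
    the full original line is kept in `raw` so nothing is lost.
    """
    inserted = 0
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS records "
            "(id TEXT, title TEXT, raw TEXT NOT NULL)"
        )
        batch = []
        with open(jsonl_path, encoding="utf-8") as handle:
            for line in handle:
                line = line.strip()
                if not line:
                    continue  # tolerate blank lines between records
                record = json.loads(line)
                batch.append((record.get("id"), record.get("title"), line))
                if len(batch) >= batch_size:
                    conn.executemany("INSERT INTO records VALUES (?, ?, ?)", batch)
                    inserted += len(batch)
                    batch.clear()
        if batch:
            conn.executemany("INSERT INTO records VALUES (?, ?, ?)", batch)
            inserted += len(batch)
        # Index after the bulk load so inserts stay fast.
        conn.execute("CREATE INDEX IF NOT EXISTS idx_records_id ON records (id)")
        conn.commit()
    finally:
        conn.close()
    return inserted
```

Writing the resulting database into the same dated snapshot folder keeps the index versioned with the data it was built from.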